Advantages and Limitations of Sign Language Corpora for Sign Language Recognition

Image credit: Authors

Abstract

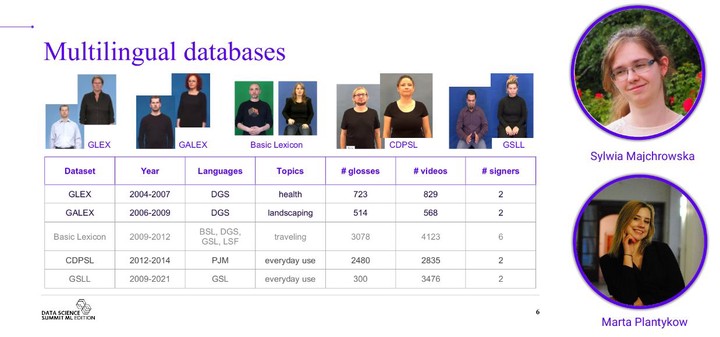

Many people use sign language on a daily basis. Some of them understand the local spoken and/or written language, while others do not. For Deaf people, this creates a major communication barrier, because qualified interpreters are scarce and relatively expensive. There is a clear need to automate sign language recognition. Deep learning-based methods are a promising way to do so, but they need a large amount of properly labeled training data to perform well. Moreover, sign languages differ between countries, and there is no universal one. In the Hear AI non-profit project, we addressed this problem by evaluating different open sign language corpora labeled by linguists in the Hamburg Notation System (HamNoSys). In our solution, we proposed computer-friendly numeric multilabels that greatly simplify the structure of the language-agnostic HamNoSys without significant loss of gloss meaning.