Image credit: Authors

Image credit: AuthorsAbstract

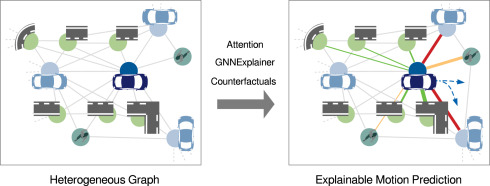

Motion prediction systems play a crucial role in enabling autonomous vehicles to navigate safely and efficiently in complex traffic scenarios. Graph Neural Network (GNN)-based approaches have emerged as a promising solution for capturing interactions among dynamic agents and static objects. However, they often lack transparency, interpretability and explainability — qualities that are essential for building trust in autonomous driving systems. In this work, we address this challenge by presenting a comprehensive approach to enhance the explainability of graph-based motion prediction systems. We introduce the Explainable Heterogeneous Graph-based Policy (XHGP) model based on an heterogeneous graph representation of the traffic scene and lane-graph traversals. Distinct from other graph-based models, XHGP leverages object-level and type-level attention mechanisms to learn interaction behaviors, providing information about the importance of agents and interactions in the scene. In addition, capitalizing on XHGP’s architecture, we investigate the explanations provided by the GNNExplainer and apply counterfactual reasoning to analyze the sensitivity of the model to modifications of the input data. This includes masking scene elements, altering trajectories, and adding or removing dynamic agents. Our proposal advances towards achieving reliable and explainable motion prediction systems, addressing the concerns of users, developers and regulatory agencies alike. The insights gained from our explainability analysis contribute to a better understanding of the relationships between dynamic and static elements in traffic scenarios, facilitating the interpretation of the results, as well as the correction of possible errors in motion prediction models, and thus contributing to the development of trustworthy motion prediction systems.